I have long had an obsession with PC building. When I was in school, my dad picked a PC he liked, and I had to live with it. Until I went to college, I kept it working through multiple component failures by fixing things myself. After that it made more sense to use laptops, and I used laptops exclusively until 2023. I had forgotten that I wanted to build a PC someday.

One fine day, my brother Krushan asked me what I considered my primary business and what I considered my secondary business. I replied that software engineering was my primary business and finance/investments my secondary one. He then asked how much money I had invested in the markets and how much my laptop was worth. As it turned out, I had invested significantly more in my secondary business and under-invested in my primary one. I liked his line of questioning and the irony of the situation, and that is when I decided to build a PC. For as long as I have worked with computers, I have used minimalistic OSes, minimalistic window managers, and minimalistic text editors because I was operating under genuine constraints, which is one of the best software educations one can have. But now I wanted to splurge and get the very best consumer hardware money can buy (hello, 24C/32T).

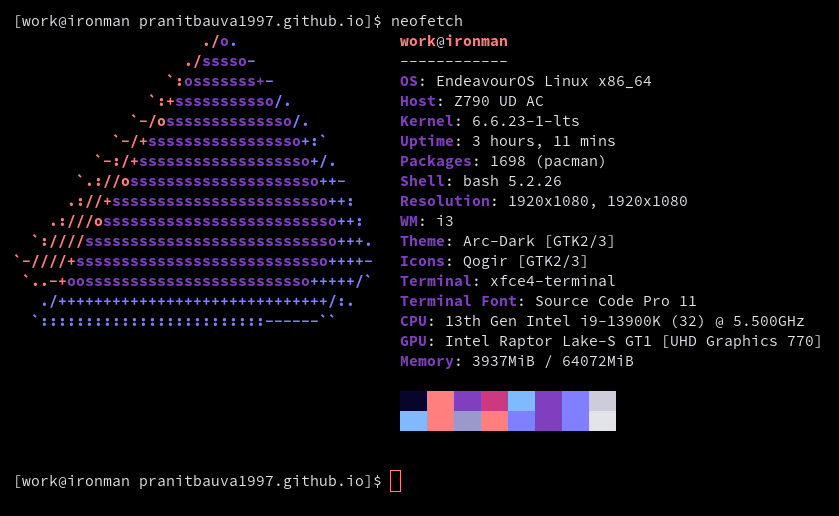

And so I did. Here are the specs:

There is also a Google Sheet if you wish to know the exact components.

I like to give my dev machines cool names: the PC is ironman, and my ThinkPad X1 Carbon is thor. Not surprisingly, my minimalistic habits didn't just vanish. I use the PC the same way I use a laptop, except that now I can run local LLMs on it. I run only Arch Linux on my dev machines and Debian on servers.

If you're wondering what's so "home lab" about this, hang on and read further. Building a PC is how I was introduced to home labbing, which is why it serves as the backstory.

Sometime in the summer of 2022, I wrote a Python script to scrape stock prices, bulk/block deals, and short-selling data from the NSE website. I deployed it as an AWS Lambda function that stored the data in RDS, and I scheduled it with cron rules in AWS EventBridge. Having this data proved very useful once I had decided to buy or sell a stock and was working out how much to buy or sell, and whether to do it today, tomorrow, or a week from now.
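For readers who haven't used Lambda, the sketch below shows the general shape of such a scraper's entry point. It is a minimal sketch under stated assumptions. The URL, the `lastPrice` field, and the `fetch_json`/`store_rows` helpers are hypothetical placeholders, because the post doesn't describe the real endpoints or schema. The RDS write is also stubbed out. The only Lambda-specific part is the `handler(event, context)` signature that the Python runtime calls.

```python
import json
import urllib.request
from typing import Callable

# Hypothetical endpoint: the post does not name the NSE URLs that were scraped.
QUOTE_URL = "https://example.com/api/quote?symbol={symbol}"


def fetch_json(url: str, timeout: float = 10.0) -> dict:
    """Fetch a URL and decode its JSON body, giving up after `timeout` seconds."""
    req = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def store_rows(rows: list[dict]) -> int:
    """Placeholder for the database write (Postgres on RDS in the original setup)."""
    return len(rows)


def handler(event: dict, context: object,
            fetch: Callable[[str], dict] = fetch_json,
            store: Callable[[list[dict]], int] = store_rows) -> dict:
    """Lambda entry point; an EventBridge cron rule would invoke it on schedule."""
    rows = []
    for symbol in event.get("symbols", []):
        data = fetch(QUOTE_URL.format(symbol=symbol))
        # Hypothetical response shape: {"lastPrice": <number>}
        rows.append({"symbol": symbol, "last_price": data["lastPrice"]})
    return {"stored": store(rows)}
```

The `fetch` and `store` parameters are there only so the handler can be exercised locally with stubs. Lambda itself passes just `event` and `context`, so the defaults apply in production. An EventBridge rule can pass a constant JSON payload such as `{"symbols": [...]}` as the event.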

Sometime in 2024, this started failing because NSE began blocking AWS CIDR blocks, and all requests were timing out. I also had more things I wanted to run, specifically scraping all company announcements and making them searchable. I stumbled upon refurbished ThinkCentres on Amazon India, and they were relatively cheap. Also, there was no way NSE was going to block my ISP, since half of India wouldn't be able to access the website if it did. This is how I ended up buying three refurbished ThinkCentres.
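To make "blocking CIDR blocks" concrete: a server can refuse or silently drop any client whose address falls inside a list of network prefixes. Once a cloud provider's ranges are on that list, every request from that provider fails at once. A blocklist like this can't easily cover a large residential ISP without cutting off ordinary users. The sketch uses Python's standard `ipaddress` module. The blocked networks are reserved documentation ranges (RFC 5737) used as stand-ins, not real AWS prefixes or NSE's actual rules.

```python
import ipaddress

# Hypothetical blocklist: RFC 5737 documentation ranges standing in for
# cloud-provider prefixes (AWS publishes its real ranges in ip-ranges.json).
BLOCKED_CIDRS = [
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("203.0.113.0/24"),
]


def is_blocked(client_ip: str) -> bool:
    """Return True if client_ip falls inside any blocked CIDR block."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in BLOCKED_CIDRS)
```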

Specs of the home lab:

- 4 cores * 3 machines (i5 6600T, i5 6500T, i5 6400T) = 12 cores

- 32 GB RAM * 3 = 96 GB

- 2 TB (Crucial MX500) + 4 TB (Samsung 870 EVO) + 256 GB NVMe (Hynix) ~ 5.5 TB (effective)
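The core and RAM totals are simple sums. The storage figure is where units matter. Drives are sold in decimal terabytes (10^12 bytes), while Linux tools typically report binary units (2^40 bytes per TiB). So the 6.256 TB of raw capacity shows up as roughly 5.69 TiB before any partitioning or filesystem overhead. This is one plausible reading of why the effective figure is lower. The post doesn't say how ~5.5 TB was measured.

```python
# Per-machine figures from the spec list above.
CORES = [4, 4, 4]                   # i5 6600T, i5 6500T, i5 6400T (4C/4T each)
RAM_GB = [32, 32, 32]
DISKS_BYTES = [2e12, 4e12, 256e9]   # drive capacities as labelled (decimal units)

TIB = 2**40                         # bytes in one tebibyte

total_cores = sum(CORES)
total_ram_gb = sum(RAM_GB)
raw_tb = sum(DISKS_BYTES) / 1e12    # decimal terabytes
raw_tib = sum(DISKS_BYTES) / TIB    # binary tebibytes, as most tools display

print(total_cores, total_ram_gb, round(raw_tb, 3), round(raw_tib, 2))
```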

I bought a gigabit switch and did the internal wiring in my home so that everything had Ethernet access. I also had to replace the heat sinks in all three machines. Since nothing I ran needed to be exposed to anyone but me, I didn't need a static IP or have to worry about setting up a firewall; everything could run on my private network. Still, I am often away from home when I need to access it, and Mukul Mehta had earlier introduced me to Tailscale, which I now love.

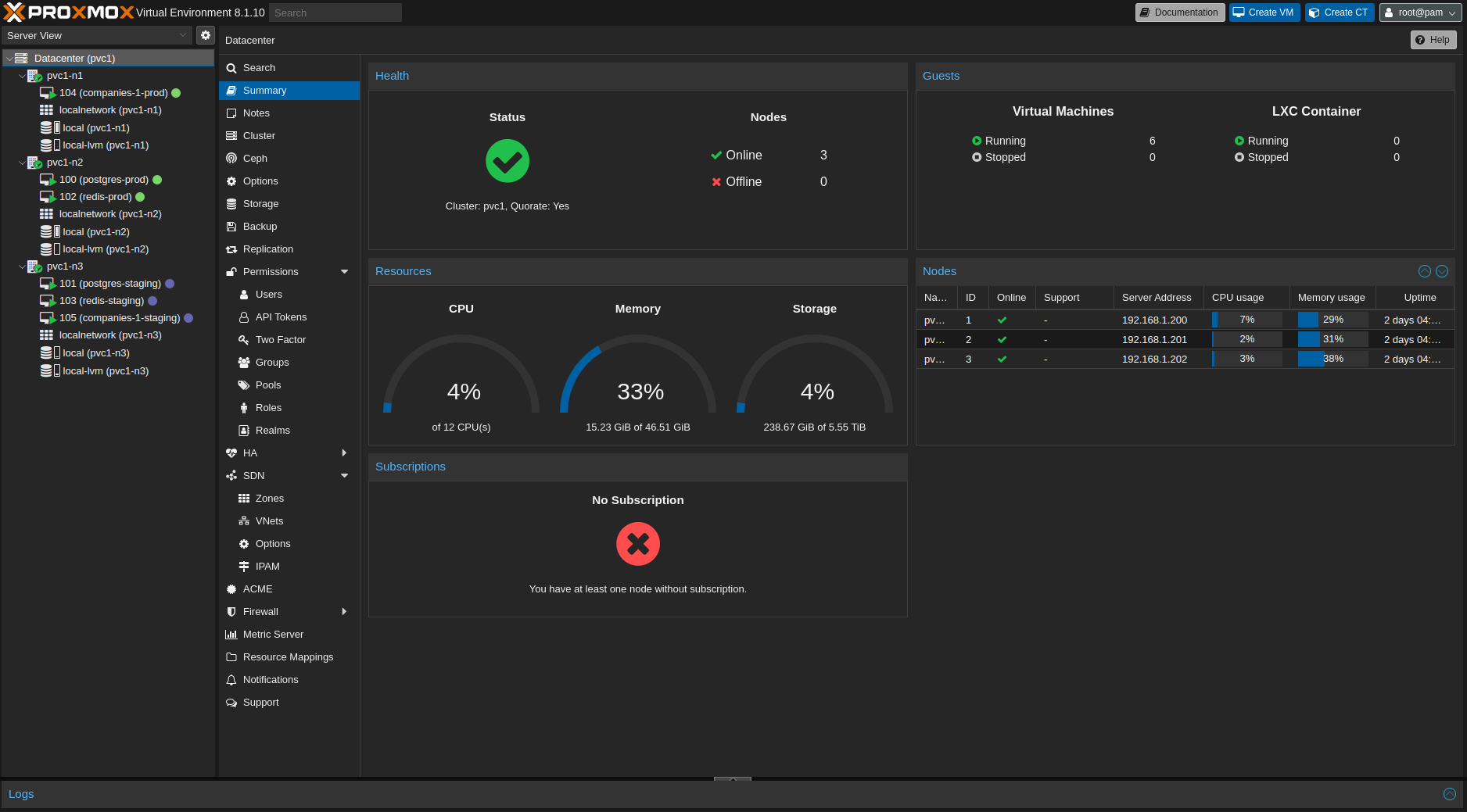

I read about how other people run their home labs, and Proxmox kept coming up again and again. I tried it, loved it, and got hooked. I set up a Proxmox cluster with all three machines, installed Tailscale on them, and handled the networking by reserving static DHCP leases on the ISP router. After that, I was good to go.
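Stable addresses matter here because Proxmox cluster membership (corosync) is configured with each node's IP address. A node that picks up a new lease can drop out of the cluster. Below is a minimal sketch of a sanity check one might run over a reservation table before typing it into the router. The subnet, MAC addresses, IPs, and `pve*` hostnames are all hypothetical, because the post doesn't list them.

```python
import ipaddress

# Hypothetical values: the post doesn't give the LAN subnet, MACs, or addresses.
LAN = ipaddress.ip_network("192.168.1.0/24")
RESERVATIONS = {
    "pve1": ("aa:bb:cc:00:00:01", "192.168.1.11"),
    "pve2": ("aa:bb:cc:00:00:02", "192.168.1.12"),
    "pve3": ("aa:bb:cc:00:00:03", "192.168.1.13"),
}


def check_reservations(reservations: dict, lan: ipaddress.IPv4Network) -> list[str]:
    """Return human-readable problems: addresses outside the LAN, or duplicate IPs/MACs."""
    problems = []
    seen_ips: dict[str, str] = {}
    seen_macs: dict[str, str] = {}
    for host, (mac, ip) in reservations.items():
        mac = mac.lower()  # MACs are case-insensitive
        if ipaddress.ip_address(ip) not in lan:
            problems.append(f"{host}: {ip} is outside {lan}")
        if ip in seen_ips:
            problems.append(f"{host}: {ip} already reserved for {seen_ips[ip]}")
        if mac in seen_macs:
            problems.append(f"{host}: {mac} already used by {seen_macs[mac]}")
        seen_ips.setdefault(ip, host)
        seen_macs.setdefault(mac, host)
    return problems
```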

Here is what I run on my home lab:

- I exclusively use VMs instead of containers since RAM is cheap but dev time is expensive

- I exclusively run Debian on all my servers (Proxmox is also Debian based)

- Set up 2 PostgreSQL VMs with TimescaleDB, one for prod and another for staging

- Set up 2 Redis VMs, one for prod and another for staging

- Set up 2 Debian VMs, one for prod and another for staging, which run containers managed by Docker Compose and systemd

- All VMs run Tailscale

- Forward all logs to Sematext

- Set up a backup schedule on Proxmox
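With prod and staging split across separate Postgres, Redis, and app VMs, the application code needs a way to pick the right set of hosts. Below is a minimal sketch of one way to do that. It assumes an `APP_ENV` environment variable and hypothetical Tailscale MagicDNS hostnames, neither of which the post names. Pydantic settings would work similarly; plain dataclasses keep the example dependency-free.

```python
import os
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Settings:
    postgres_host: str
    redis_host: str
    app_host: str


# Hypothetical Tailscale MagicDNS hostnames; the post doesn't name its VMs.
ENVIRONMENTS = {
    "prod": Settings("pg-prod", "redis-prod", "app-prod"),
    "staging": Settings("pg-staging", "redis-staging", "app-staging"),
}


def load_settings(env: Optional[str] = None) -> Settings:
    """Pick prod or staging hosts; defaults to staging so a missing variable never hits prod."""
    name = env or os.environ.get("APP_ENV", "staging")
    if name not in ENVIRONMENTS:
        raise ValueError(f"unknown environment {name!r}; expected one of {sorted(ENVIRONMENTS)}")
    return ENVIRONMENTS[name]


def database_url(settings: Settings, db: str = "markets", user: str = "app") -> str:
    """Build a libpq-style connection URI (database and user names are hypothetical)."""
    return f"postgresql://{user}@{settings.postgres_host}:5432/{db}"
```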

My stack is exclusively Python: Celery workers, Celery beat, Flower, FastAPI, Pydantic, psycopg, and Tesseract. I have never liked ORMs, and I still raw dog SQL. I deploy using Makefiles that SSH and SCP into the machines, and I have some linters set up as GitHub Actions.
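For readers curious what "no ORM" looks like in practice: queries are plain SQL strings with bound parameters, and rows come back as tuples. The sketch below uses the standard library's `sqlite3` as a stand-in so it runs anywhere. The author uses psycopg against Postgres, where the placeholder is `%s` rather than `?`. The `bulk_deals` table, its columns, and the sample rows are hypothetical.

```python
import sqlite3

# Hypothetical schema for illustration; the post doesn't describe its tables.
SCHEMA = """
CREATE TABLE bulk_deals (
    deal_date TEXT NOT NULL,      -- ISO date, so string comparison orders correctly
    symbol    TEXT NOT NULL,
    client    TEXT NOT NULL,
    side      TEXT NOT NULL CHECK (side IN ('BUY', 'SELL')),
    quantity  INTEGER NOT NULL
)
"""


def net_quantity_by_symbol(conn: sqlite3.Connection, since: str) -> list[tuple]:
    """Net bulk-deal quantity per symbol (buys minus sells) on or after `since`."""
    sql = """
        SELECT symbol,
               SUM(CASE WHEN side = 'BUY' THEN quantity ELSE -quantity END) AS net_qty
        FROM bulk_deals
        WHERE deal_date >= ?
        GROUP BY symbol
        ORDER BY symbol
    """
    # Parameters are bound by the driver, never interpolated into the SQL string.
    return conn.execute(sql, (since,)).fetchall()
```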

Home labs usually see minimal CPU usage, but that's not the case for mine, because I run Tesseract to extract text from NSE's corporate announcements, which are published as PDFs. A big problem with refurbished ThinkCentres is heat, which I haven't seen anyone discuss in the context of home labs. I have seen people stack ThinkCentres on top of one another, which invites heat problems; it is better to leave some space between them. I also bought Gaiatop USB fans, and the heat issue is now resolved. I don't need to keep the machines running 24x7, since the actual scraping jobs take about 4-5 hours. Dust is another ongoing problem, so I have to clean the machines regularly. I usually keep Sundays for maintenance and downtime.
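Tesseract works on images, not PDFs, so each announcement has to be rasterized page by page first. That OCR step is where the CPU time goes. Below is a minimal sketch of one common pipeline: poppler's `pdftoppm` renders pages to PNG, and the `tesseract` CLI prints the recognized text to stdout. This is an assumption for illustration. The post doesn't say how the PDFs are converted, and the author may well call Tesseract through a Python wrapper instead. `workdir` is assumed to be a fresh scratch directory per PDF.

```python
import subprocess
from pathlib import Path


def pdf_to_png_cmd(pdf: Path, out_prefix: Path, dpi: int = 300) -> list[str]:
    """pdftoppm (poppler-utils) writes one <prefix>-<page>.png per page."""
    return ["pdftoppm", "-r", str(dpi), "-png", str(pdf), str(out_prefix)]


def ocr_cmd(image: Path, lang: str = "eng") -> list[str]:
    """Using "stdout" as the output base makes tesseract print text instead of writing a file."""
    return ["tesseract", str(image), "stdout", "-l", lang]


def ocr_pdf(pdf: Path, workdir: Path) -> str:
    """Rasterize every page, OCR each image in page order, and join the text."""
    subprocess.run(pdf_to_png_cmd(pdf, workdir / pdf.stem), check=True)
    # pdftoppm zero-pads page numbers to equal width, so a lexical sort keeps page order.
    pages = sorted(workdir.glob(f"{pdf.stem}-*.png"))
    texts = [
        subprocess.run(ocr_cmd(page), check=True, capture_output=True, text=True).stdout
        for page in pages
    ]
    return "\n".join(texts)
```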

Some improvements I would like to make:

- Getting a Synology NAS to store backups outside of Proxmox

- Scraping more things and expanding the scope of the project

One thing I would like to point out to people who might be planning to run home labs in the future:

I could never relate to running your own DNS server, or to browsing self-hosted software just to figure out what I could run. I also have weak opinions on whether cloud providers should have your data (I use Google One for syncing files). I don't watch many movies either, and when I do, I would rather download them on my dev machines. I don't want to run my own mail server. But I feel that a home lab should scratch your own itch and help you automate a part of your life. That is how I learned programming, and I think it is the best way to get started.

I do like to sync my Calibre library across devices, which I do through Google Drive (I can't use Calibre on two machines at the same time, and I don't need to). I also sync my notes through Google Drive, and I use QOwnNotes because I wanted an app that isn't built on Electron.

Note: I bought Insync for a smooth syncing experience with Google Drive on Linux.

Here is a photo of the rest of the desk I work from:

Some Reddit communities I frequent regarding this: