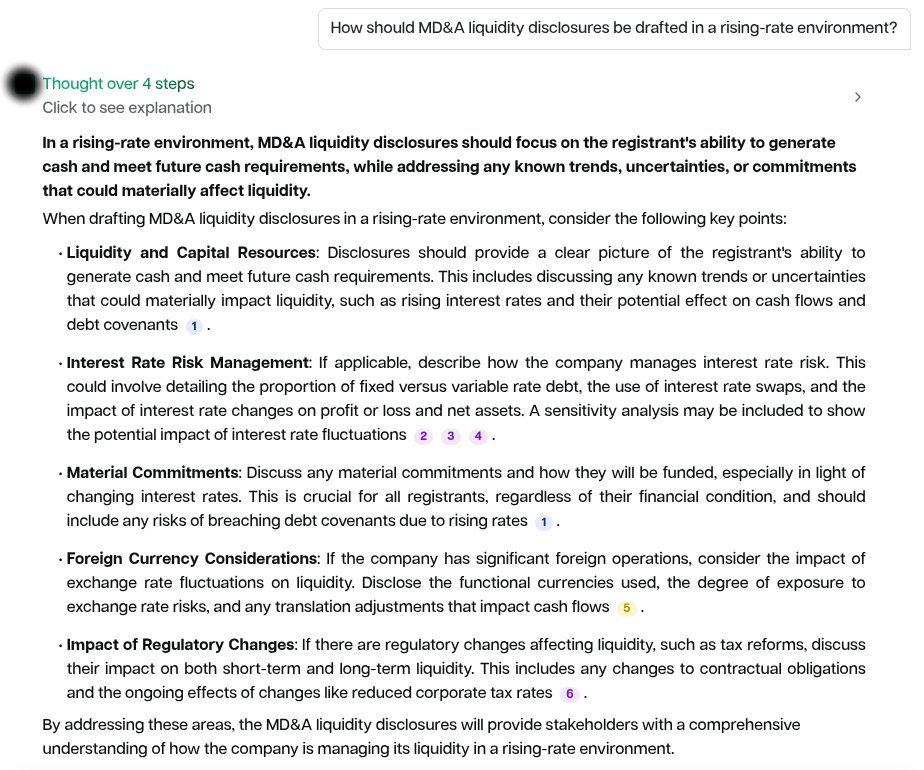

One of the hardest things about ripping out an old workflow run by human intelligence you trust and replacing it with "something AI" is the mistake of omission, i.e. what the human would have done that the AI didn't. Let me explain with an example. I was trying out a product built for the accounting and financial reporting teams of publicly listed companies. I asked a question taken from the product's own sample questions: "How should management discussions and analysis liquidity disclosures be drafted in a rising-rate environment?"

Pause, look at the answer, and think about what's missing. If you were an investor in the company making these disclosures, what information would you expect from the management team?

The answer looks right, seems plausible, but is still unusable.

What is missing: the maturity profile of liabilities. When money is expensive and scarce, the first thing investors want to see is when your debt comes due. If a large chunk matures next year, refinancing gets harder and costlier; if markets shut, you need cash you may not have. Seven years out? Different story.

My workflow goal: 3 hours → 30 minutes (without losing the point)

I’ve built an AI workflow to cut quarterly report analysis from ~3 hours to ~30 minutes. It only helps if it surfaces what I expect it to surface. Personally, I tolerate verbosity; I don’t tolerate omission. Others might be the reverse; that preference should be configurable, not accidental. Getting there took a lot of time: I kept doing the same task manually that I asked the AI to do, benchmarked where the AI outperformed me and where I outperformed the AI, and kept making tweaks to close the gap.

Why automated evals aren’t enough (and where they shine)

Automated evals mostly check correctness on known questions. Omission is a coverage problem. So:

- Human evals by domain experts are how you establish trust

- Automated evals are how you prevent regressions once you’ve defined what “coverage” means

Treat automated evals as regression tests, not trust tests.
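To make "regression test for coverage" concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `LIQUIDITY_CHECKLIST` name, the topics other than the debt maturity profile, and the keyword patterns are placeholders that a domain expert would replace. Keyword matching is crude and will miss paraphrases, so treat this as a tripwire for known omissions, not a measure of trust.

```python
import re

# Hypothetical checklist for one question type (liquidity disclosures).
# Each expected topic maps to regex patterns that suggest it was addressed.
# Only "debt maturity profile" comes from the example above; the other
# topics are illustrative placeholders.
LIQUIDITY_CHECKLIST = {
    "debt maturity profile": [
        r"\bmatur(es|ity|ities|ing)\b",
        r"\bcomes? due\b",
        r"\brefinanc",
    ],
    "interest rate exposure": [
        r"\b(floating|variable)[- ]rate\b",
        r"\binterest rate (risk|sensitivity)\b",
    ],
    "cash and credit facilities": [
        r"\bcash (and cash equivalents|balance|position)\b",
        r"\b(credit facility|revolver|revolving)\b",
    ],
}


def missing_topics(answer: str, checklist: dict[str, list[str]]) -> list[str]:
    """Return the checklist topics that no pattern finds in the answer."""
    text = answer.lower()
    return [
        topic
        for topic, patterns in checklist.items()
        if not any(re.search(pattern, text) for pattern in patterns)
    ]


# An answer that sounds plausible but never says when the debt comes due.
sample_answer = (
    "Management should discuss interest rate risk on floating-rate "
    "borrowings and describe the current cash position."
)
omitted = missing_topics(sample_answer, LIQUIDITY_CHECKLIST)
print(omitted)
```

Run against a fixed set of real queries after every prompt or model change, a non-empty result for a previously passing query is the regression signal. The checklist itself still has to come from human review.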

If you're building AI tools

- Run regular human evals on real user queries

- Convert recurring expectations into checklists and regression tests

- Track a simple rubric: coverage, specificity, decision-usefulness, omission risk

- Only then optimize for speed or style
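The rubric in the list above can be tracked with very little machinery. The following is a rough sketch, assuming a human reviewer scores each answer from 1 to 5 on the four dimensions. The `Review` class, the 1-to-5 scale, and the thresholds are all hypothetical choices, not a standard.

```python
from dataclasses import dataclass
from statistics import mean

# The four rubric dimensions, as attribute names on Review.
DIMENSIONS = ("coverage", "specificity", "decision_usefulness", "omission_risk")


@dataclass
class Review:
    """One human review of one AI answer, scored 1-5 per dimension."""
    query: str
    coverage: int             # higher is better
    specificity: int          # higher is better
    decision_usefulness: int  # higher is better
    omission_risk: int        # higher is WORSE (more likely something is missing)


def summarize(reviews: list[Review]) -> dict[str, float]:
    """Average score per dimension; raises StatisticsError if reviews is empty."""
    return {dim: mean(getattr(r, dim) for r in reviews) for dim in DIMENSIONS}


def needs_attention(summary: dict[str, float],
                    floor: float = 3.0,
                    risk_ceiling: float = 2.0) -> list[str]:
    """Dimensions whose average falls outside the (assumed) thresholds."""
    flagged = [dim for dim in DIMENSIONS[:3] if summary[dim] < floor]
    if summary["omission_risk"] > risk_ceiling:
        flagged.append("omission_risk")
    return flagged


# Made-up scores, for illustration only.
reviews = [
    Review("liquidity disclosures, rising rates", 2, 4, 4, 4),
    Review("segment reporting changes", 3, 5, 4, 3),
]
summary = summarize(reviews)
print(summary)
print(needs_attention(summary))
```

Keeping `omission_risk` on an inverted scale is deliberate here: it forces the reviewer to ask "what would I have included?" separately from "is what's here any good?".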

PS. I’ve probably spent 500+ hours so that 3-hour tasks take 30 minutes. I haven’t fully recouped that yet. But like the time I invested in Vim/Neovim tinkering 4 years ago, I am hopeful this will pay huge dividends. For core portfolio names, I still do both runs (manual and AI) to watch for quality drift; for competitors, I use the 30-minute AI pass exclusively.